S3 Connector

Version 23.4.8839

The S3 connector integrates with Amazon’s S3 (Simple Storage Service) and other S3-like services (such as Google Storage and Wasabi).

Overview

Each S3 connector can automatically upload to and download from a single S3 bucket.

Before you begin, you need an Amazon account with the appropriate credentials (or account credentials for the S3-like service you are using). Specify the upload and download paths in the bucket. The connector supports download filters by file name.

Connector Configuration

This section contains all of the configurable connector properties.

Settings Tab

Host Configuration

Settings related to the remote connection target.

- Connector Id The static, unique identifier for the connector.

- Connector Type Displays the connector name and a description of what it does.

- Connector Description An optional field to provide a free-form description of the connector and its role in the flow.

- Bucket Name The S3 bucket to poll or upload to.

Host Configuration

Additional settings related to the remote connection target.

- Service Use the dropdown to choose which service to connect to. Select Other to specify the base URL to use when connecting to the service.

- Region The Region where the specified Bucket Name is stored.

Account Settings

Settings related to the account with permission to access the configured Bucket Name.

- IAM Role Whether to use the attached IAM role to access S3. Only use this setting when CData Arc is hosted on an EC2 instance that has an IAM role attached. The IAM credentials replace the two Key options below.

- Access Key The Access Key account credential acquired from Amazon (or the S3-like service).

- Secret Key The Secret Key account credential acquired from Amazon (or the S3-like service).

- Assume Role ARN The Amazon Resource Name (ARN) of a role to assume. The connector uses the Access Key and Secret Key above to call the Amazon STS service and obtain temporary credentials for accessing S3 with the provided role ARN.

TLS Settings

Settings related to TLS negotiation with the S3 server.

- TLS Check this to enable TLS negotiation.

- Server Public Certificate The public key certificate to trust when connecting to the S3 server. Set this to Any Certificate to implicitly trust the server.

Upload

Settings related to the path in the specified bucket where files are uploaded.

- Prefix The remote path on the server where files are uploaded.

- Overwrite Action Whether to overwrite the existing file, skip the upload, or fail when a file with the same name already exists at the remote path.

Download

Settings related to the path in the specified bucket from which files are downloaded.

- Prefix The remote path on the server from where files are downloaded.

- File Filter A glob pattern that determines which files are downloaded from the remote storage (for example, *.txt). Use negative patterns to indicate files that should not be downloaded (for example, -*.tmp). To apply multiple patterns, separate them with commas. Later patterns take priority, except when an exact match is found.

- Delete Check this to delete successfully downloaded files from the remote storage.
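The File Filter behavior described above can be sketched in Python. This is only an illustration of one reading of the rules, not the connector's implementation: the function name `should_download` is hypothetical, and the defaults (an empty filter accepts everything; a filter with only negative patterns accepts anything it does not exclude) are assumptions.

```python
from fnmatch import fnmatchcase


def should_download(filename: str, file_filter: str) -> bool:
    """Decide whether a file name passes a comma-separated filter list.

    Assumed rules (an interpretation of the documentation, not verified):
    - a leading '-' marks a negative (exclude) pattern
    - a pattern that equals the file name exactly wins outright
    - otherwise the last matching pattern decides
    """
    patterns = [p.strip() for p in file_filter.split(",") if p.strip()]
    rules = [(p[1:], False) if p.startswith("-") else (p, True) for p in patterns]

    # Exact matches take priority regardless of their position in the list.
    for glob, include in rules:
        if glob == filename:
            return include

    # Assumption: with no positive patterns, files are included by default.
    decision = not any(include for _, include in rules)

    # Later patterns override earlier ones.
    for glob, include in rules:
        if fnmatchcase(filename, glob):
            decision = include
    return decision
```

For example, under these assumptions the filter `*,-*.tmp,keep.tmp` would accept `keep.tmp` (exact match) and reject every other `.tmp` file.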

Caching

Settings related to caching and comparing files between multiple downloads.

- File Size Comparison Check this to keep a record of downloaded file names and sizes. Previously downloaded files are skipped unless the file size is different than the last download.

- Timestamp Comparison Check this to keep a record of downloaded file names and last-modified timestamps. Previously downloaded files are skipped unless the timestamp is different than the last download.

Note: When you enable caching, the file names are case-insensitive. For example, the connector cannot distinguish between TEST.TXT and test.txt.
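The caching rules above can be pictured with a small Python sketch. The class `DownloadCache` and its method names are hypothetical and exist only for illustration; the sketch assumes that the cache is keyed by the lower-cased file name, which is what makes TEST.TXT and test.txt indistinguishable.

```python
from typing import Dict, Tuple


class DownloadCache:
    """Illustrative record of previously downloaded files (not Arc's code)."""

    def __init__(self, compare_size: bool = False, compare_timestamp: bool = False):
        self.compare_size = compare_size
        self.compare_timestamp = compare_timestamp
        # Keyed by lower-cased name, so names differing only in case collide.
        self._seen: Dict[str, Tuple[int, float]] = {}

    def needs_download(self, name: str, size: int, modified: float) -> bool:
        if not (self.compare_size or self.compare_timestamp):
            return True  # caching disabled: always download
        previous = self._seen.get(name.lower())
        if previous is None:
            return True  # never downloaded before
        prev_size, prev_modified = previous
        if self.compare_size and size != prev_size:
            return True
        if self.compare_timestamp and modified != prev_modified:
            return True
        return False

    def record(self, name: str, size: int, modified: float) -> None:
        self._seen[name.lower()] = (size, modified)
```

With File Size Comparison alone, a file whose timestamp changes but whose size stays the same is skipped in this sketch; enabling Timestamp Comparison as well would catch that case.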

Automation Tab

Automation Settings

Settings related to the automatic processing of files by the connector.

- Upload Whether files arriving at the connector are automatically uploaded.

- Retry Interval The amount of time before a failed upload is retried.

- Max Attempts The maximum number of times the connector processes the input file. Success is measured based on a successful server acknowledgement. If this is set to 0, the connector retries the file indefinitely.

- Download Whether the connector should automatically poll the remote download path for files to download.

- Download Interval The interval between automatic download attempts.

- Minutes Past the Hour The minutes offset for an hourly schedule. Only applicable when the interval setting above is set to Hourly. For example, if this value is set to 5, the automation service downloads at 1:05, 2:05, 3:05, etc.

- Time The time of day that the attempt should occur. Only applicable when the interval setting above is set to Daily, Weekly, or Monthly.

- Day The day on which the attempt should occur. Only applicable when the interval setting above is set to Weekly or Monthly.

- Minutes The number of minutes to wait before attempting the download. Only applicable when the interval setting above is set to Minute.

- Cron Expression A five-position string representing a cron expression that determines when the attempt should occur. Only applicable when the interval setting above is set to Advanced.
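To make the scheduling options above more concrete, here is a minimal Python sketch. Both helper names are hypothetical. `next_hourly_run` mirrors the Minutes Past the Hour example (a value of 5 yields 1:05, 2:05, and so on), and `split_cron` assumes the conventional five-field cron order (minute, hour, day of month, month, day of week), which this page does not spell out.

```python
from datetime import datetime, timedelta

# Assumed field order: the conventional five-field cron layout.
CRON_FIELDS = ("minute", "hour", "day_of_month", "month", "day_of_week")


def next_hourly_run(now: datetime, minutes_past: int) -> datetime:
    """Return the next time after `now` that falls `minutes_past` the hour."""
    if not 0 <= minutes_past <= 59:
        raise ValueError("minutes_past must be between 0 and 59")
    candidate = now.replace(minute=minutes_past, second=0, microsecond=0)
    if candidate <= now:
        candidate += timedelta(hours=1)
    return candidate


def split_cron(expression: str) -> dict:
    """Split a five-position cron expression into named fields."""
    parts = expression.split()
    if len(parts) != len(CRON_FIELDS):
        raise ValueError("expected exactly five cron fields")
    return dict(zip(CRON_FIELDS, parts))
```

Under that assumed field order, the expression `5 * * * *` would describe the same schedule as an Hourly interval with Minutes Past the Hour set to 5.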

Performance

Settings related to the allocation of resources to the connector.

- Max Workers The maximum number of worker threads consumed from the threadpool to process files on this connector. If set, this overrides the default setting on the Settings > Automation page.

- Max Files The maximum number of files sent by each thread assigned to the connector. If set, this overrides the default setting on the Settings > Automation page.

Alerts Tab

Settings related to configuring alerts and Service Level Agreements (SLAs).

Connector Email Settings

Before you can execute SLAs, you need to set up email alerts for notifications. Clicking Configure Alerts opens a new browser window to the Settings page where you can set up system-wide alerts. See Alerts for more information.

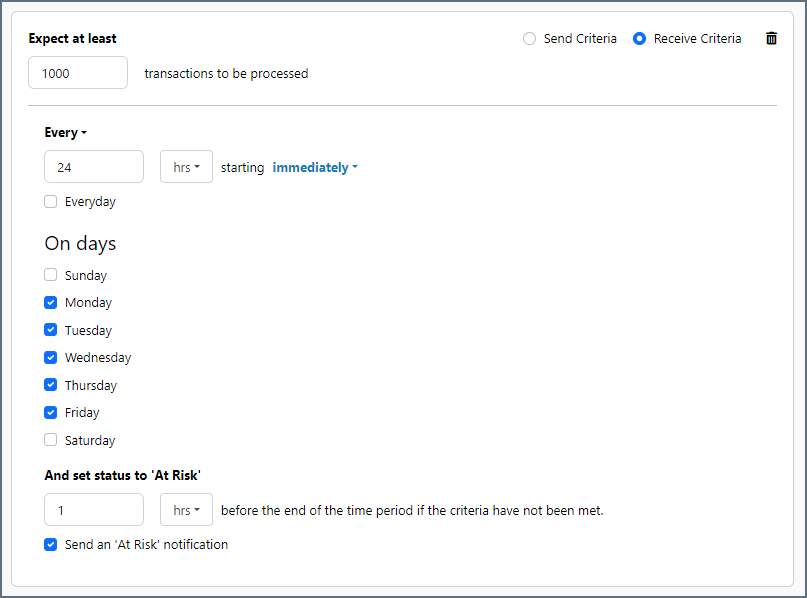

Service Level Agreement (SLA) Settings

SLAs enable you to configure the volume you expect connectors in your flow to send or receive, and to set the time frame in which you expect that volume to be met. CData Arc sends emails to warn the user when an SLA is not met, and marks the SLA as At Risk, which means that if the SLA is not met soon, it will be marked as Violated. This gives the user an opportunity to step in and determine the reasons the SLA is not being met, and to take appropriate actions. If the SLA is still not met at the end of the at-risk time period, the SLA is marked as violated, and the user is notified again.

To define an SLA, click Add Expected Volume Criteria.

- If your connector has separate send and receive actions, use the radio buttons to specify which direction the SLA pertains to.

- Set Expect at least to the minimum number of transactions (the volume) you expect to be processed, then use the Every fields to specify the time frame.

- By default, the SLA is in effect every day. To change that, uncheck Everyday then check the boxes for the days of the week you want.

- Use And set status to ‘At Risk’ to indicate when the SLA should be marked as at risk.

- By default, notifications are not sent until an SLA is in violation. To change that, check Send an ‘At Risk’ notification.

The following example shows an SLA configured for a connector that expects to receive 1000 files every day Monday-Friday. An at-risk notification is sent 1 hour before the end of the time period if the 1000 files have not been received.
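As a rough illustration of how the SLA statuses relate to each other (this is not CData Arc's implementation), the logic can be sketched in Python. The function name `sla_status` and the labels "Met" and "Pending" are assumptions introduced for the sketch; only "At Risk" and "Violated" come from this page.

```python
from datetime import datetime, timedelta


def sla_status(
    received: int,
    expected: int,
    period_end: datetime,
    at_risk_window: timedelta,
    now: datetime,
) -> str:
    """Classify an SLA given the volume received so far in the period."""
    if received >= expected:
        return "Met"  # hypothetical label: the expected volume was reached
    if now >= period_end:
        return "Violated"  # the period ended without reaching the volume
    if now >= period_end - at_risk_window:
        return "At Risk"  # inside the warning window before the period ends
    return "Pending"  # hypothetical label: still time left in the period
```

Using the numbers from the example above, 400 of 1000 expected files with 30 minutes left and a 1-hour at-risk window would be classified as "At Risk" in this sketch.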

Advanced Tab

Proxy Settings

A collection of settings that identify and authenticate to the proxy through which the S3 connection should be routed. By default, this section uses the global settings on the Settings page. Clear the checkbox to supply settings specific to your connector.

- Proxy Type The protocol used by a proxy-based firewall.

- Proxy Host The name or IP address of a proxy-based firewall.

- Proxy Port The TCP port for a proxy-based firewall.

- Proxy User The user name to use to authenticate with a proxy-based firewall.

- Proxy Password A password used to authenticate to a proxy-based firewall.

- Authentication Scheme Leave the default None or choose from one of the following authentication schemes: Basic, Digest, Proprietary, or NTLM.

Advanced Settings

Settings not included in the previous categories.

- Access Policy The access policy set on objects after they are uploaded to the S3 server.

- Encryption Password If set, object data is encrypted on the client side before upload, and downloaded objects are automatically decrypted.

- Processing Delay The amount of time (in seconds) by which the processing of files placed in the Input folder is delayed. This is a legacy setting. Best practice is to use a File connector to manage local file systems instead of this setting.

- Recurse Whether to download files in subfolders of the target remote path.

- Local File Scheme A scheme for assigning filenames to messages that are output by the connector. You can use macros in your filenames dynamically to include information such as identifiers and timestamps. For more information, see Macros.

- Server Side Encryption Whether to use server-side AES256 encryption.

- Virtual Hosting Whether to use hosted-style or path-style requests when referencing the bucket endpoint.
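The difference between the two request styles named in the Virtual Hosting setting can be shown with a short Python sketch. The function `bucket_url` is hypothetical, and the host names follow Amazon S3's public endpoint format; other S3-like services use different base URLs, so treat the output as an example rather than what the connector sends.

```python
from urllib.parse import quote


def bucket_url(bucket: str, key: str, region: str, virtual_hosting: bool) -> str:
    """Build an Amazon S3 object URL in hosted-style or path-style form."""
    encoded_key = quote(key.lstrip("/"), safe="/")
    if virtual_hosting:
        # Hosted-style: the bucket name is part of the host name.
        return f"https://{bucket}.s3.{region}.amazonaws.com/{encoded_key}"
    # Path-style: the bucket name is the first segment of the path.
    return f"https://s3.{region}.amazonaws.com/{bucket}/{encoded_key}"
```

Path-style requests are sometimes needed for S3-like services or bucket names that cannot be used as part of a host name.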

Message

- Save to Sent Folder Check this to copy files processed by the connector to the Sent folder for the connector.

- Sent Folder Scheme Instructs the connector to group messages in the Sent folder according to the selected interval. For example, the Weekly option instructs the connector to create a new subfolder each week and store all messages for the week in that folder. The blank setting tells the connector to save all messages directly in the Sent folder. For connectors that process many messages, using subfolders helps keep messages organized and improves performance.

Logging

- Log Level The verbosity of logs generated by the connector. When you request support, set this to Debug.

- Log Subfolder Scheme Instructs the connector to group files in the Logs folder according to the selected interval. For example, the Weekly option instructs the connector to create a new subfolder each week and store all logs for the week in that folder. The blank setting tells the connector to save all logs directly in the Logs folder. For connectors that process many transactions, using subfolders helps keep logs organized and improves performance.

- Log Messages Check this to have the log entry for a processed file include a copy of the file itself. If you disable this, you might not be able to download a copy of the file from the Input or Output tabs.

Miscellaneous

Miscellaneous settings are for specific use cases.

- Other Settings Enables you to configure hidden connector settings in a semicolon-separated list (for example, setting1=value1;setting2=value2). Normal connector use cases and functionality should not require the use of these settings.
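The semicolon-separated format used by Other Settings can be read as a simple list of name=value pairs. The following Python sketch shows that reading; the function name `parse_other_settings` is hypothetical, and the handling of whitespace and empty entries is an assumption rather than documented behavior.

```python
def parse_other_settings(text: str) -> dict:
    """Parse 'setting1=value1;setting2=value2' into a dictionary."""
    settings = {}
    for pair in text.split(";"):
        pair = pair.strip()
        if not pair:
            continue  # tolerate a trailing semicolon or empty entries
        name, separator, value = pair.partition("=")
        if not separator:
            raise ValueError(f"missing '=' in setting: {pair!r}")
        settings[name.strip()] = value.strip()
    return settings
```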

Establishing a Connection

The requirements for establishing an S3 connection are simple:

- Amazon account credentials (or other S3-like account credentials)

  - Access Key

  - Secret Key

- A bucket that can be accessed by the above account

For Amazon S3, use this link to obtain Access Key and Secret Key information from Amazon.

Optionally, you can secure the connection to the S3 server with TLS by enabling the TLS option in the TLS Settings section.

Uploading

Upload to Remote Folders

The Prefix setting in the Upload section of the Settings page specifies the bucket path to upload files to. This allows for the logical separation of files into virtual folders in the same bucket.

Note: S3 servers do not maintain a real folder structure, and Arc uses application logic to present a pseudo folder structure. Slashes in the Prefix (/, \\) are interpreted as representing a folder hierarchy. This allows for uploading to or downloading from ‘subfolders’ in the bucket based on the slashes in the path.
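Because the folder structure is only a convention over object keys, combining a Prefix with a file name amounts to string handling. The following Python sketch illustrates the idea; the function name `object_key` is hypothetical, and normalizing backslashes to forward slashes is an assumption based on the note above, not a description of Arc's internal logic.

```python
def object_key(prefix: str, filename: str) -> str:
    """Join a Prefix and a file name into a single S3 object key."""
    # Treat both '/' and '\' as folder separators and drop empty segments.
    parts = [segment for segment in prefix.replace("\\", "/").split("/") if segment]
    parts.append(filename)
    return "/".join(parts)
```

In this sketch, the prefixes `invoices/2024` and `invoices\2024\` both place `a.xml` at the key `invoices/2024/a.xml`, and an empty prefix leaves the file at the top level of the bucket.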

Upload Automation

The S3 connector supports automatic upload via the Automation tab. When Upload automation is enabled, files that reach the Input folder for the connector are automatically uploaded to the specified Bucket Name at the specified Prefix.

If a file fails to upload, the application attempts to send it again after the Retry Interval has elapsed. This process continues until the Max Attempts has been reached, after which the connector raises an error.
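The retry behavior can be summarized with a small Python sketch. It is a simplified model under stated assumptions, not the connector's code: `upload_with_retry` and its parameters are hypothetical, the retry interval is expressed in seconds, and a Max Attempts value of 0 is treated as unlimited, as described on the Automation tab.

```python
import time
from typing import Callable


def upload_with_retry(
    upload: Callable[[], None],
    max_attempts: int,
    retry_interval: float,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Call `upload` until it succeeds; return the number of attempts used.

    Raises RuntimeError once `max_attempts` is reached (0 means no limit).
    """
    attempt = 0
    while True:
        attempt += 1
        try:
            upload()
            return attempt
        except Exception as exc:
            if max_attempts and attempt >= max_attempts:
                raise RuntimeError(f"upload failed after {attempt} attempts") from exc
            sleep(retry_interval)  # wait for the Retry Interval before retrying
```

The `sleep` parameter exists only so the waiting step can be replaced in tests or simulations.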

Downloading

Download from Remote Folders

The Prefix setting in the Download section of the Settings page specifies the bucket path to download files from. This allows for the logical separation of files into virtual folders in the same bucket.

The File Filter setting provides a way to only download specific filenames in the specified path.

Note: S3 servers do not maintain a real folder structure, and Arc uses application logic to present a pseudo folder structure. Slashes in the Prefix (/, \\) are interpreted as representing a folder hierarchy. This allows for uploading to or downloading from ‘subfolders’ in the bucket based on the slashes in the path.

Download Automation

The S3 connector supports automatic download via the Automation tab. When Download automation is enabled, the connector automatically polls the remote bucket based on the specified Download Interval.

Macros

Using macros in file naming strategies can enhance organizational efficiency and contextual understanding of data. By incorporating macros into filenames, you can dynamically include relevant information such as identifiers, timestamps, and header information, providing valuable context to each file. This helps ensure that filenames reflect details important to your organization.

CData Arc supports these macros, which all use the following syntax: %Macro%.

| Macro | Description |
|---|---|
| ConnectorID | Evaluates to the ConnectorID of the connector. |
| Ext | Evaluates to the file extension of the file currently being processed by the connector. |
| Filename | Evaluates to the filename (extension included) of the file currently being processed by the connector. |
| FilenameNoExt | Evaluates to the filename (without the extension) of the file currently being processed by the connector. |
| MessageId | Evaluates to the MessageId of the message being output by the connector. |
| RegexFilename:pattern | Applies a RegEx pattern to the filename of the file currently being processed by the connector. |
| Header:headername | Evaluates to the value of a targeted header (headername) on the current message being processed by the connector. |
| LongDate | Evaluates to the current datetime of the system in long-handed format (for example, Wednesday, January 24, 2024). |
| ShortDate | Evaluates to the current datetime of the system in a yyyy-MM-dd format (for example, 2024-01-24). |
| DateFormat:format | Evaluates to the current datetime of the system in the specified format (format). See Sample Date Formats for the available datetime formats. |
| Vault:vaultitem | Evaluates to the value of the specified vault item. |

Examples

Some macros, such as %Ext% and %ShortDate%, do not require an argument, but others do. All macros that take an argument use the following syntax: %Macro:argument%

Here are some examples of the macros that take an argument:

- %Header:headername%: Where headername is the name of a header on a message.

  - %Header:mycustomheader% resolves to the value of the mycustomheader header set on the input message.

  - %Header:ponum% resolves to the value of the ponum header set on the input message.

- %RegexFilename:pattern%: Where pattern is a regex pattern. For example, %RegexFilename:^([\w][A-Za-z]+)% matches and resolves to the first word in the filename and is case insensitive (test_file.xml resolves to test).

- %Vault:vaultitem%: Where vaultitem is the name of an item in the vault. For example, %Vault:companyname% resolves to the value of the companyname item stored in the vault.

- %DateFormat:format%: Where format is an accepted date format (see Sample Date Formats for details). For example, %DateFormat:yyyy-MM-dd-HH-mm-ss-fff% resolves to the date and timestamp on the file.

You can also create more sophisticated macros, as shown in the following examples:

- Combining multiple macros in one filename: %DateFormat:yyyy-MM-dd-HH-mm-ss-fff%%EXT%

- Including text outside of the macro: MyFile_%DateFormat:yyyy-MM-dd-HH-mm-ss-fff%

- Including text within the macro: %DateFormat:'DateProcessed-'yyyy-MM-dd_'TimeProcessed-'HH-mm-ss%
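To show how the %Macro% and %Macro:argument% syntax can be resolved, here is a minimal Python sketch that handles only four of the macros listed above (Filename, FilenameNoExt, ShortDate, and Header). It is not CData Arc's implementation: the function name `expand_macros` is hypothetical, and treating macro names as case-insensitive, returning an empty string for a missing header, and leaving unsupported macros untouched are all assumptions.

```python
import re
from datetime import datetime
from pathlib import PurePath
from typing import Mapping

# Matches %Macro% and %Macro:argument%.
MACRO = re.compile(r"%([^%]+)%")


def expand_macros(
    scheme: str, filename: str, headers: Mapping[str, str], now: datetime
) -> str:
    """Expand a small subset of macros in a file naming scheme."""
    path = PurePath(filename)

    def resolve(match: re.Match) -> str:
        name, _, argument = match.group(1).partition(":")
        key = name.lower()  # assumption: macro names are case-insensitive
        if key == "filename":
            return path.name
        if key == "filenamenoext":
            return path.stem
        if key == "shortdate":
            return now.strftime("%Y-%m-%d")  # yyyy-MM-dd, as in the table
        if key == "header":
            return headers.get(argument, "")  # assumption: missing -> empty
        return match.group(0)  # unsupported macros are left unchanged

    return MACRO.sub(resolve, scheme)
```

For instance, with a `ponum` header of `PO123` and a system date of January 24, 2024, the scheme `%FilenameNoExt%_%ShortDate%_%Header:ponum%.xml` applied to `order.xml` would yield `order_2024-01-24_PO123.xml` in this sketch.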