Oracle Connector Setup

Version 23.4.8839

The Oracle connector allows you to integrate Oracle into your data flow by pushing or pulling data from Oracle. Follow the steps below to connect CData Arc to Oracle.

Configuring Dependencies

To use Oracle in your flows, you must first install and configure the dependencies that Oracle requires. Follow the directions below for your version of Arc.

.NET Edition

- Open the Arc installation directory (by default, C:\Program Files\CData\CData Arc).

- Open the www folder.

- Open the Web.Config file in a text or code editor. This is an XML-formatted file.

- Navigate to the element <configuration>/<system.data>/<DbProviderFactories>.

- At the bottom of this element, add the following text:

  <remove invariant="Oracle.ManagedDataAccess.Client" />
  <add name="ODP.NET, Managed Driver" invariant="Oracle.ManagedDataAccess.Client" description="Oracle Data Provider for .NET, Managed Driver" type="Oracle.ManagedDataAccess.Client.OracleClientFactory, Oracle.ManagedDataAccess" />

- Save the Web.Config file and close it.

- Download the Oracle ODAC .dll files from the Oracle website. Choose the option titled ODP.NET_Managed_ODACxxxx.zip where the xxxx represents the version in the file name.

  Note: Access to this download requires an Oracle account.

- Extract the files to a folder. Copy the files in the table below to their corresponding subfolders in the Arc installation directory.

| File Name | Relative Path to Copy to |
|---|---|
| Oracle.ManagedDataAccess.dll | \bin |
| Oracle.ManagedDataAccessDTC.dll (x64 version) | \bin\x64 |
| Oracle.ManagedDataAccessIOP.dll (x64 version) | \bin\x64 |
| Oracle.ManagedDataAccessDTC.dll (x86 version) | \bin\x86 |
| Oracle.ManagedDataAccessIOP.dll (x86 version) | \bin\x86 |
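The Web.Config change in the steps above can also be scripted. The following Python sketch is only an illustration: the function name add_oracle_provider is hypothetical, and creating missing <system.data> or <DbProviderFactories> elements is an assumption (the steps above expect them to exist). It works on the XML text rather than on the file itself, and ElementTree drops comments and the XML declaration when parsing, so back up the real file before trying anything similar.

```python
import xml.etree.ElementTree as ET

INVARIANT = "Oracle.ManagedDataAccess.Client"


def _child(parent, tag):
    """Return the first direct child with this tag, creating it if absent (assumption)."""
    for node in parent:
        if node.tag == tag:
            return node
    return ET.SubElement(parent, tag)


def add_oracle_provider(config_xml):
    """Return config_xml with the ODP.NET managed driver entries appended."""
    root = ET.fromstring(config_xml)
    if root.tag != "configuration":
        raise ValueError("expected a <configuration> root element")
    factories = _child(_child(root, "system.data"), "DbProviderFactories")
    # Drop earlier entries for this provider so repeated runs do not duplicate them.
    for node in list(factories):
        if node.get("invariant") == INVARIANT:
            factories.remove(node)
    # Append the two elements from the documented step at the bottom of the element.
    ET.SubElement(factories, "remove", {"invariant": INVARIANT})
    ET.SubElement(factories, "add", {
        "name": "ODP.NET, Managed Driver",
        "invariant": INVARIANT,
        "description": "Oracle Data Provider for .NET, Managed Driver",
        "type": "Oracle.ManagedDataAccess.Client.OracleClientFactory, "
                "Oracle.ManagedDataAccess",
    })
    return ET.tostring(root, encoding="unicode")
```

Running the function twice on the same text yields the same result, which makes it safe to repeat after an upgrade overwrites the file.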

Java Edition

- Download the Oracle JDBC .jar file from the Oracle website.

  - If you are using the Arc embedded Jetty server, select the latest release of ojdbc11.jar.

  - If you are using another hosting option, select the latest release of either ojdbc11.jar or ojdbc8.jar, depending on the version of JDK your hosting server uses.

- Move the file to the appropriate location:

  - If you are using the embedded Jetty server, move the file to the lib folder in the Arc installation directory ([Arc]/lib). If this folder does not exist, create it.

  - If you are using another server, move the file to the lib folder for the server. If this folder does not exist, create it.
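The Java Edition steps can be sketched in Python as follows. This is an illustration under stated assumptions, not an official installer: choose_driver and place_driver are hypothetical names, and the rule that JDK 11 or later maps to ojdbc11.jar while older JDKs map to ojdbc8.jar is an assumption that you should confirm against Oracle's compatibility notes for your JDK.

```python
import shutil
from pathlib import Path


def choose_driver(jdk_major, embedded_jetty):
    """Pick a driver file name (the JDK mapping is an assumption, see above)."""
    if embedded_jetty or jdk_major >= 11:
        return "ojdbc11.jar"
    return "ojdbc8.jar"


def place_driver(jar_path, lib_dir):
    """Move the downloaded .jar into the lib folder, creating the folder if needed."""
    lib = Path(lib_dir)
    lib.mkdir(parents=True, exist_ok=True)  # "If this folder does not exist, create it."
    target = lib / Path(jar_path).name
    shutil.move(str(jar_path), str(target))
    return target
```

For the embedded Jetty server, lib_dir would be the lib folder in the Arc installation directory; for another server, it would be that server's lib folder.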

Establish a Connection

To allow Arc to use data from Oracle, you must first establish a connection to Oracle. There are two ways to establish this connection:

- Add an Oracle connector to your flow. Then, in the settings pane, click Create next to the Connection drop-down list.

- Open the Arc Settings page, then open the Connections tab. Click Add, select Oracle, and click Next.

Note:

- The login process is only required the first time the connection is created.

- Connections to Oracle can be re-used across multiple Oracle connectors.

Enter Connection Settings

After opening a new connection dialogue, follow these steps:

- Provide the requested information:

  - Name: the static name of the connection. Set this as desired.

  - Type: this is always set to Oracle.

  - Connection Type: the connection type (SID or Service Name) to use.

  - User: the username to use for logging in.

  - Password: the password for the user entered above.

  - Server: the address of the Oracle server you want to connect to.

  - Port: the port to use when connecting to the server.

  - SID/Service: the SID or Service (depending on Connection Type chosen) to use when connecting.

  - Other: other authentication information if needed.

- If needed, click Advanced to open the drop-down menu of advanced connection settings. These should not be needed in most cases.

- Click Test Connection to ensure that Arc can connect to Oracle with the provided information. If an error occurs, check all fields and try again.

- Click Add Connection to finalize the connection.

- In the Connection drop-down list of the connector configuration pane, select the newly-created connection.

- Click Save Changes.

Note: There are also data source-specific authentication and configuration options on the Advanced tab. They are not all described in this documentation, but you can find detailed information for your data source on the Online Help Files page of the CData website.
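To illustrate why the Connection Type matters, the sketch below builds a JDBC thin-style URL from the Server, Port, and SID/Service fields. Arc assembles its own connection internally, so this is not the string Arc uses; the function name jdbc_thin_url and the example host are hypothetical. It only shows that a SID and a Service Name occupy different positions in a typical Oracle connection address.

```python
def jdbc_thin_url(server, port, identifier, connection_type):
    """Build a JDBC thin-style URL for a SID or a Service Name."""
    if connection_type == "SID":
        # SID form: host, port, and SID separated by colons.
        return f"jdbc:oracle:thin:@{server}:{port}:{identifier}"
    if connection_type == "Service Name":
        # Service form: double slash before the host, slash before the service.
        return f"jdbc:oracle:thin:@//{server}:{port}/{identifier}"
    raise ValueError("connection_type must be 'SID' or 'Service Name'")


print(jdbc_thin_url("db.example.com", 1521, "ORCL", "SID"))
print(jdbc_thin_url("db.example.com", 1521, "orcl.example.com", "Service Name"))
```

If Test Connection fails with a valid user and password, a mismatch between the chosen Connection Type and the value in SID/Service is one thing worth checking.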

Select an Action

After establishing a connection to Oracle, you must choose the action that the Oracle connector will perform. The table below outlines each action and where it belongs in an Arc flow.

| Action | Description | Position in Flow |
|---|---|---|
| Upsert | Inserts or updates Oracle data. By default, if a record already exists in Oracle, an update is performed on the existing data in Oracle using the values provided from the input. | End |
| Lookup | Retrieves values from Oracle and inserts those values into an already-existing Arc message in the flow. The Lookup Query determines what value the connector will retrieve from Oracle. It should be formatted as a SQL query against the Oracle tables. | Middle |
| Lookup Stored Procedure | Treats data coming into the connector as input for a stored procedure, then inserts the result into an existing Arc message in the flow. You can click the Show Sample Data button in the Test Lookup modal to provide sample inputs to the selected Stored Procedure and preview the results. | Middle |
| Select | Retrieves data from Oracle and brings it into Arc. You can use the Filter panel to add filters to the Select. These filters function similarly to WHERE clauses in SQL. | Beginning |
| Execute Stored Procedures | Treats data coming into the connector as input for a stored procedure, then passes the result down the flow. You can click the Show Sample Data button in the Test Execute Stored Procedure modal to provide sample inputs to the selected Stored Procedure and preview the results. | Middle |
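The insert-or-update behavior of the Upsert action corresponds to what an Oracle MERGE statement expresses in SQL. The sketch below builds such a statement to make the semantics concrete. It is not the SQL that Arc generates; the function name build_merge and the table and column names in the usage line are hypothetical.

```python
def build_merge(table, key_columns, value_columns):
    """Build an Oracle MERGE statement with named bind variables.

    Identifiers are inserted as-is, so they must come from a trusted source.
    """
    all_columns = list(key_columns) + list(value_columns)
    source = ", ".join(f":{c} AS {c}" for c in all_columns)
    match = " AND ".join(f"t.{c} = s.{c}" for c in key_columns)
    updates = ", ".join(f"t.{c} = s.{c}" for c in value_columns)
    insert_columns = ", ".join(all_columns)
    insert_values = ", ".join(f"s.{c}" for c in all_columns)
    return (
        f"MERGE INTO {table} t "
        f"USING (SELECT {source} FROM dual) s "
        f"ON ({match}) "
        # Existing record: update it with the input values.
        f"WHEN MATCHED THEN UPDATE SET {updates} "
        # No existing record: insert a new one.
        f"WHEN NOT MATCHED THEN INSERT ({insert_columns}) VALUES ({insert_values})"
    )


print(build_merge("customers", ["id"], ["name", "email"]))
```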

Automation Tab

Automation Settings

Settings related to the automatic processing of files by the connector.

- Send: Whether files arriving at the connector are automatically sent.

- Retry Interval: The number of minutes before a failed send is retried.

- Max Attempts: The maximum number of times the connector processes the file. Success is measured based on a successful server acknowledgement. If you set this to 0, the connector retries the file indefinitely.

- Receive: Whether the connector should automatically query the data source.

- Receive Interval: The interval between automatic query attempts.

- Minutes Past the Hour: The minutes offset for an hourly schedule. Only applicable when the interval setting above is set to Hourly. For example, if this value is set to 5, the automation service downloads at 1:05, 2:05, 3:05, etc.

- Time: The time of day that the attempt should occur. Only applicable when the interval setting above is set to Daily, Weekly, or Monthly.

- Day: The day on which the attempt should occur. Only applicable when the interval setting above is set to Weekly or Monthly.

- Minutes: The number of minutes to wait before attempting the download. Only applicable when the interval setting above is set to Minute.

- Cron Expression: A five-position string representing a cron expression that determines when the attempt should occur. Only applicable when the interval setting above is set to Advanced.
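As an illustration of the Minutes Past the Hour setting, the following sketch computes the next run time for an hourly schedule with a given offset. The function name next_hourly_run is hypothetical, and treating a run that falls exactly on the current time as already past is an assumption; Arc's scheduler may behave differently at that boundary.

```python
from datetime import datetime, timedelta


def next_hourly_run(now, minutes_past):
    """Return the next time after now that falls minutes_past into an hour."""
    if not 0 <= minutes_past <= 59:
        raise ValueError("minutes_past must be between 0 and 59")
    candidate = now.replace(minute=minutes_past, second=0, microsecond=0)
    if candidate <= now:
        # This hour's slot has passed, so use the same offset in the next hour.
        candidate += timedelta(hours=1)
    return candidate


print(next_hourly_run(datetime(2024, 1, 24, 12, 47), 5))  # 2024-01-24 13:05:00
print(next_hourly_run(datetime(2024, 1, 24, 12, 3), 5))   # 2024-01-24 12:05:00
```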

Performance

Settings related to the allocation of resources to the connector.

- Max Workers: The maximum number of worker threads consumed from the threadpool to process files on this connector. If set, this overrides the default setting on the Settings > Automation page.

- Max Files: The maximum number of files sent by each thread assigned to the connector. If set, this overrides the default setting on the Settings > Automation page.

Alerts Tab

Settings related to configuring alerts and Service Level Agreements (SLAs).

Connector Email Settings

Before you can execute SLAs, you need to set up email alerts for notifications. Clicking Configure Alerts opens a new browser window to the Settings page where you can set up system-wide alerts. See Alerts for more information.

Service Level Agreement (SLA) Settings

SLAs enable you to configure the volume you expect connectors in your flow to send or receive, and to set the time frame in which you expect that volume to be met. CData Arc sends emails to warn the user when an SLA is not met, and marks the SLA as At Risk, which means that if the SLA is not met soon, it will be marked as Violated. This gives the user an opportunity to step in and determine the reasons the SLA is not being met, and to take appropriate actions. If the SLA is still not met at the end of the at-risk time period, the SLA is marked as violated, and the user is notified again.

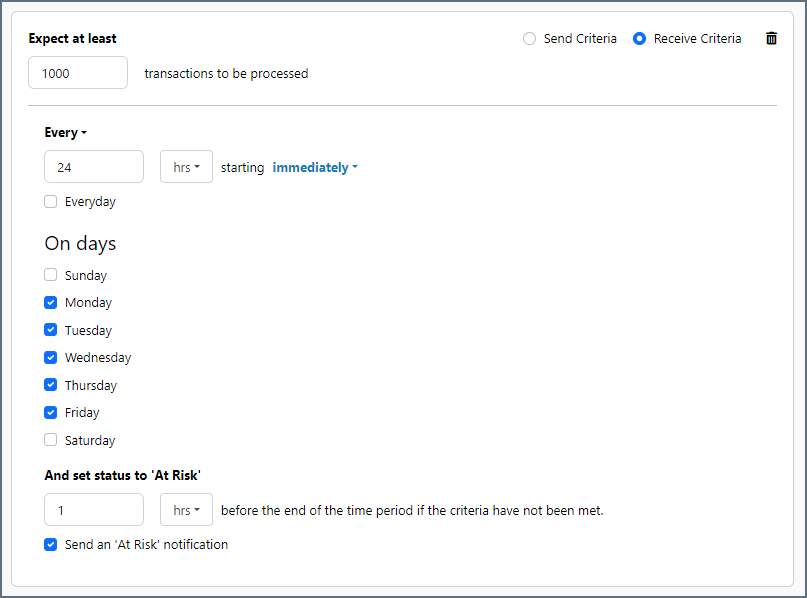

To define an SLA, click Add Expected Volume Criteria.

- If your connector has separate send and receive actions, use the radio buttons to specify which direction the SLA pertains to.

- Set Expect at least to the minimum number of transactions (the volume) you expect to be processed, then use the Every fields to specify the time frame.

- By default, the SLA is in effect every day. To change that, uncheck Everyday then check the boxes for the days of the week you want.

- Use And set status to ‘At Risk’ to indicate when the SLA should be marked as at risk.

- By default, notifications are not sent until an SLA is in violation. To change that, check Send an ‘At Risk’ notification.

The following example shows an SLA configured for a connector that expects to receive 1000 files every day Monday-Friday. An at-risk notification is sent 1 hour before the end of the time period if the 1000 files have not been received.
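The same scenario can be expressed as a small status check. This sketch only models the behavior described in this section and is not Arc's implementation: the function name sla_status and the "Met" and "Pending" labels are hypothetical, while "At Risk" and "Violated" follow the text.

```python
from datetime import datetime, timedelta


def sla_status(received, expected, now, period_end, at_risk_lead):
    """Classify an SLA from the received volume and the current time."""
    if received >= expected:
        return "Met"
    if now >= period_end:
        return "Violated"  # Volume still not reached when the period ended.
    if now >= period_end - at_risk_lead:
        return "At Risk"   # Inside the at-risk window before the period ends.
    return "Pending"


# 1000 files expected by the end of the day, at-risk window of 1 hour.
end = datetime(2024, 1, 25, 0, 0)
lead = timedelta(hours=1)
print(sla_status(800, 1000, datetime(2024, 1, 24, 22, 0), end, lead))   # Pending
print(sla_status(800, 1000, datetime(2024, 1, 24, 23, 15), end, lead))  # At Risk
print(sla_status(800, 1000, datetime(2024, 1, 25, 0, 0), end, lead))    # Violated
```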

Advanced Tab

Many of the settings on the Advanced tab are dynamically loaded from the data source you are working with, so they are not covered in this documentation. You can find detailed information for your data source on the Online Help Files page of the CData website.

The options described below are available for all data sources.

Message

- Save to Sent Folder: Check this to copy files processed by the connector to the Sent folder for the connector.

- Sent Folder Scheme: Instructs the connector to group messages in the Sent folder according to the selected interval. For example, the Weekly option instructs the connector to create a new subfolder each week and store all messages for the week in that folder. The blank setting tells the connector to save all messages directly in the Sent folder. For connectors that process many messages, using subfolders helps keep messages organized and improves performance.

Advanced Settings

Advanced settings are common settings for connectors that rely on arbitrary database drivers (for example, Database, CData, and API connectors) in order to make connections to various data sources.

- Auto Truncate: When enabled, the connector automatically truncates any string or binary column values that are longer than the allowed limit.

- Command Timeout: The command execution timeout duration in seconds.

- Last Inserted Id Query: Provide a query to execute to retrieve the auto-increased Id for the last inserted record.

- Log Level: The verbosity of logs generated by the connector. When you request support, set this to Debug.

- Local File Scheme: A scheme for assigning filenames to messages that are output by the connector. You can use macros in your filenames dynamically to include information such as identifiers and timestamps. For more information, see Macros.

- Log Messages: When checked, the connector keeps a copy of the message next to the logs for the message in the Logs directory. If you disable this, you might not be able to download a copy of the file from the Input or Output tabs.

- Max Failed Records: The maximum number of records that are allowed to fail during insertion to allow processing to continue. The default value of 0 means that any errors cause the input message to be marked as an Error, and any uncommitted transactions are rolled back. A value of -1 means that all errors are ignored and the connector continues attempting to insert subsequent records. A positive value means that the connector continues attempting to insert records until the threshold is reached.

- Output File Format: The format in which output data is represented. The default value (XML) causes the connector to output an XML file for every record that is processed, while optionally combining multiple records into a single data structure (depending on the value of Max Records). The CSV and TSV options output data in their respective file formats. This option is not available for complex table structures that include child tables.

- Process Changes Interval Unit: Applicable to Select action only. When Use column columnname for processing new or changed records is checked in the Advanced portion of the Select Configuration section of the Settings tab, this controls how to interpret the Process Changes Interval setting (for example, Hours, Days, or Weeks). See Only Process New or Changed Records for details on this and the following two settings.

- Process Changes Interval: When Use column columnname for processing new or changed records is checked on the Select Configuration section of the Settings tab, this controls how much historical data Arc attempts to process on the first attempt. For example, keeping the default of 180 (Days) means Arc only attempts to process data that has been created or modified in the last 180 days.

- Reset History: Resets the cache stored when Use column columnname for processing new or changed records is checked.

- Batch Input Size: The maximum number of queries in a batch if batches are supported by the data source.

- Batch Size: The maximum number of batch messages in a batch group.

- Max Records: The maximum number of records to include in a single output message. Use -1 to indicate that all output records should be put in a single file, and use 0 to indicate that the connector can decide based on the configured Output File Format. By default, XML outputs one record per file, and flat file formats include all records in one file.

- Transaction Size: The maximum number of queries in a transaction.

- Processing Delay: The amount of time (in seconds) by which the processing of files placed in the Input folder is delayed. This is a legacy setting. Best practice is to use a File connector to manage local file systems instead of this setting.

- Log Subfolder Scheme: Instructs the connector to group files in the Logs folder according to the selected interval. For example, the Weekly option instructs the connector to create a new subfolder each week and store all logs for the week in that folder. The blank setting tells the connector to save all logs directly in the Logs folder. For connectors that process many transactions, using subfolders can help keep logs organized and improve performance.
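The three cases of Max Failed Records (0, -1, and a positive value) can be illustrated with a short sketch. It models the description above rather than Arc's actual code: insert_with_threshold is a hypothetical name, stopping only once the failure count exceeds the threshold is an assumption about the exact boundary, and the rollback of uncommitted transactions is not modeled.

```python
def insert_with_threshold(records, insert, max_failed):
    """Insert records one by one, tolerating up to max_failed failures."""
    inserted = failed = 0
    for record in records:
        try:
            insert(record)
            inserted += 1
        except Exception:
            failed += 1
            # -1 ignores all errors; otherwise stop once the allowance is exceeded.
            if max_failed != -1 and failed > max_failed:
                return {"inserted": inserted, "failed": failed, "status": "Error"}
    return {"inserted": inserted, "failed": failed, "status": "Success"}


def reject_even(value):
    """Stand-in for a database insert that fails on even numbers."""
    if value % 2 == 0:
        raise ValueError(f"cannot insert {value}")


print(insert_with_threshold([1, 2, 3, 4, 5], reject_even, 0))
print(insert_with_threshold([1, 2, 3, 4, 5], reject_even, -1))
```

With the default of 0, the first failure ends processing; with -1, every record is attempted regardless of earlier failures.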

Miscellaneous

Miscellaneous settings are for specific use cases.

- Other Settings: Enables you to configure hidden connector settings in a semicolon-separated list (for example, setting1=value1;setting2=value2). Normal connector use cases and functionality should not require the use of these settings.
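The semicolon-separated format used by Other Settings is easy to check before pasting it into the field. The sketch below parses such a list into a dictionary. The function name parse_other_settings is hypothetical, and the sketch assumes that values never contain a semicolon, which this documentation does not confirm.

```python
def parse_other_settings(text):
    """Parse 'name=value;name=value' into a dict, rejecting malformed entries."""
    settings = {}
    for part in text.split(";"):
        part = part.strip()
        if not part:
            continue  # Tolerate a trailing semicolon or empty segment.
        name, separator, value = part.partition("=")
        if not separator or not name.strip():
            raise ValueError(f"expected name=value, got {part!r}")
        settings[name.strip()] = value.strip()
    return settings


print(parse_other_settings("setting1=value1;setting2=value2"))
```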

Macros

Using macros in file naming strategies can enhance organizational efficiency and contextual understanding of data. By incorporating macros into filenames, you can dynamically include relevant information such as identifiers, timestamps, and header information, providing valuable context to each file. This helps ensure that filenames reflect details important to your organization.

CData Arc supports these macros, which all use the following syntax: %Macro%.

| Macro | Description |
|---|---|
| ConnectorID | Evaluates to the ConnectorID of the connector. |
| Ext | Evaluates to the file extension of the file currently being processed by the connector. |
| Filename | Evaluates to the filename (extension included) of the file currently being processed by the connector. |
| FilenameNoExt | Evaluates to the filename (without the extension) of the file currently being processed by the connector. |
| MessageId | Evaluates to the MessageId of the message being output by the connector. |
| RegexFilename:pattern | Applies a RegEx pattern to the filename of the file currently being processed by the connector. |
| Header:headername | Evaluates to the value of a targeted header (headername) on the current message being processed by the connector. |
| LongDate | Evaluates to the current datetime of the system in long-handed format (for example, Wednesday, January 24, 2024). |
| ShortDate | Evaluates to the current datetime of the system in a yyyy-MM-dd format (for example, 2024-01-24). |
| DateFormat:format | Evaluates to the current datetime of the system in the specified format (format). See Sample Date Formats for the available datetime formats. |
| Vault:vaultitem | Evaluates to the value of the specified vault item. |
| Table | Evaluates to the name of the table where the connector is selecting data from. |
| PK | Evaluates to the primary key value of the received record from the table. |
| Sequence | Evaluates to a four-digit number based on how many total records were received by the connector (for example, %Sequence% evaluates to 0005 for the fifth record returned). |
| FileFormat | Evaluates to the output file format specified on the connector’s Advanced tab (XML, CSV, or TSV). |

Examples

Some macros, such as %Ext% and %ShortDate%, do not require an argument, but others do. All macros that take an argument use the following syntax: %Macro:argument%

Here are some examples of the macros that take an argument:

- %Header:headername%: Where headername is the name of a header on a message.

  - %Header:mycustomheader% resolves to the value of the mycustomheader header set on the input message.

  - %Header:ponum% resolves to the value of the ponum header set on the input message.

- %RegexFilename:pattern%: Where pattern is a regex pattern. For example, %RegexFilename:^([\w][A-Za-z]+)% matches and resolves to the first word in the filename and is case insensitive (test_file.xml resolves to test).

- %Vault:vaultitem%: Where vaultitem is the name of an item in the vault. For example, %Vault:companyname% resolves to the value of the companyname item stored in the vault.

- %DateFormat:format%: Where format is an accepted date format (see Sample Date Formats for details). For example, %DateFormat:yyyy-MM-dd-HH-mm-ss-fff% resolves to the date and timestamp on the file.

You can also create more sophisticated macros, as shown in the following examples:

- Combining multiple macros in one filename: %DateFormat:yyyy-MM-dd-HH-mm-ss-fff%%EXT%

- Including text outside of the macro: MyFile_%DateFormat:yyyy-MM-dd-HH-mm-ss-fff%

- Including text within the macro: %DateFormat:'DateProcessed-'yyyy-MM-dd_'TimeProcessed-'HH-mm-ss%
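To make the %Macro% and %Macro:argument% syntax concrete, the following sketch expands a small subset of the macros (Filename, FilenameNoExt, ShortDate, Header, and RegexFilename). It is an illustration, not Arc's macro engine: the function name expand is hypothetical, matching macro names without regard to case is an assumption, and unsupported macros such as DateFormat and Vault are left untouched. Regex patterns that contain a percent sign are not handled.

```python
import re
from datetime import datetime
from pathlib import PurePath

# %Name% or %Name:argument%, where the argument contains no percent sign.
MACRO = re.compile(r"%([A-Za-z]+)(?::([^%]*))?%")


def expand(template, filename, headers, now):
    """Expand a subset of filename macros in template."""
    def resolve(match):
        name, argument = match.group(1).lower(), match.group(2)
        if name == "filename":
            return filename
        if name == "filenamenoext":
            return PurePath(filename).stem
        if name == "shortdate":
            return now.strftime("%Y-%m-%d")
        if name == "header" and argument is not None:
            return headers.get(argument, "")
        if name == "regexfilename" and argument is not None:
            found = re.search(argument, filename, re.IGNORECASE)
            return found.group(0) if found else ""
        return match.group(0)  # Leave macros outside this subset as they are.
    return MACRO.sub(resolve, template)


now = datetime(2024, 1, 24)
print(expand("%FilenameNoExt%_%Header:ponum%.xml", "test_file.xml", {"ponum": "42"}, now))
print(expand(r"%RegexFilename:^([\w][A-Za-z]+)%", "test_file.xml", {}, now))
```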