Databricks Connector Setup

Version 23.4.8841

Version 23.4.8841

Databricks Connector Setup

The Databricks connector allows you to integrate Databricks into your data flow by pushing or pulling data from Databricks. Follow the steps below to connect CData Arc to Databricks.

Establish a Connection

To allow Arc to use data from Databricks, you must first establish a connection to Databricks. There are two ways to establish this connection:

- Add a Databricks connector to your flow. Then, in the settings pane, click Create next to the Connection drop-down list.

- Open the Arc Settings page, then open the Connections tab. Click Add, select Databricks, and click Next.

Note:

- The login process is only required the first time the connection is created.

- Connections to Databricks can be re-used across multiple Databricks connectors.

Enter Connection Settings

After opening a new connection dialogue, follow these steps:

- Enter the connection information:

- Name — The static name of the connection.

- Type — This is always set to Databricks.

- Auth Scheme — The authentication scheme to use for the connection. Options are Personal Access Token and Azure Service Principal.

- Create the connection according to your selected Auth scheme:

- Personal Access Token — Enter the following information:

- Server — The host name or IP address of the server hosting Databricks.

- HTTP Path — To be written

- Access Token — Your Databricks access token.

- Azure Service Principal — Enter the following information:

- Server — The host name or IP address of the server hosting Databricks.

- HTTP Path — To be written

- AzureTenantId — The tenant Id of your Microsoft Azure Active Directory.

- AzureClientId — The application (client) Id of your Microsoft Azure Active Directory application.

- Azure Client Secret — The application (client) secret of your Microsoft Azure Active Directory application.

- Azure Subscription Id — The subscription Id of your Microsoft Azure Databricks Service Workspace.

- Azure Resource Group — The resource group name of your Microsoft Azure Databricks Service workspace.

- Azure Workspace — The name of your Microsoft Azure Databricks Service Workspace.

- Personal Access Token — Enter the following information:

-

If necessary, click Advanced to open the drop-down menu of advanced connection settings. These settings should not be needed in most cases.

-

Click Test Connection to ensure that Arc can connect to Databricks with the provided information. If an error occurs, check all fields and try again.

-

Click Add Connection to finalize the connection.

-

In the Connection drop-down list of the connector configuration pane, select the newly-created connection.

- Click Save Changes.

Note:また、高度な設定タブには、データソース固有の認証および設定オプションがあります。このドキュメントではすべてが説明されているわけではありませんが、各データソースに関する詳細情報はCData Web サイトの製品ドキュメントページで見つけることができます。

アクションを選択する

Databricks への接続を確立したら、Databricks コネクタが実行するアクションを選択する必要があります。以下のテーブルは、各アクションの概要とそれがArc フローのどこに属するかを示しています。

| アクション | 説明 | フローにおけるポジション |

|---|---|---|

| Upsert | Databricks データを挿入または更新します。デフォルトでは、Databricks にすでにレコードが存在する場合は、入力から提供された値を使用してDatabricks の既存のデータに対して更新が実行されます。 | End |

| Lookup | Databricks から値を取得し、それらの値をフロー内の既存のArc メッセージに挿入します。 ルックアップクエリは、コネクタがDatabricks から取得する値を決定します。これは、Databricks テーブルに対するSQL クエリとしてフォーマットする必要があります。 |

Middle |

| Lookup Stored Procedure | コネクタに入力されたデータをストアドプロシージャの入力として扱い、結果をフロー内の既存のArc メッセージに挿入します。 Lookup をテストモーダルにあるサンプルデータを表示ボタンをクリックすると、選択したストアドプロシージャにサンプル入力を提供し、結果をプレビューできます。 |

Middle |

| Select | Databricks からデータを取得し、それをArc に取り込みます。 フィルタパネルを使用して、Select にフィルタを追加できます。これらのフィルタは、SQL の WHERE 句と同じように機能します。 |

Beginning |

| Execute Stored Procedures | コネクタに入力されたデータをストアドプロシージャの入力として扱い、結果をフローに渡します。 Execute Stored Procedure をテストモーダルにあるサンプルデータを表示ボタンをクリックすると、選択したストアドプロシージャにサンプル入力を提供し、結果をプレビューできます。 |

Middle |

Automation Tab

Automation Settings

Settings related to the automatic processing of files by the connector.

- Send Whether files arriving at the connector are automatically sent.

- Retry Interval The number of minutes before a failed send is retried.

- Max Attempts The maximum number of times the connector processes the file. Success is measured based on a successful server acknowledgement. If you set this to 0, the connector retries the file indefinitely.

- Receive Whether the connector should automatically query the data source.

- Receive Interval The interval between automatic query attempts.

- Minutes Past the Hour The minutes offset for an hourly schedule. Only applicable when the interval setting above is set to Hourly. For example, if this value is set to 5, the automation service downloads at 1:05, 2:05, 3:05, etc.

- Time The time of day that the attempt should occur. Only applicable when the interval setting above is set to Daily, Weekly, or Monthly.

- Day The day on which the attempt should occur. Only applicable when the interval setting above is set to Weekly or Monthly.

- Minutes The number of minutes to wait before attempting the download. Only applicable when the interval setting above is set to Minute.

- Cron Expression A five-position string representing a cron expression that determines when the attempt should occur. Only applicable when the interval setting above is set to Advanced.

Performance

Settings related to the allocation of resources to the connector.

- Max Workers The maximum number of worker threads consumed from the threadpool to process files on this connector. If set, this overrides the default setting on the Settings > Automation page.

- Max Files The maximum number of files sent by each thread assigned to the connector. If set, this overrides the default setting on the Settings > Automation page.

アラートタブ

アラートとサービスレベル(SLA)の設定に関連する設定.

コネクタのE メール設定

サービスレベル(SLA)を実行する前に、通知用のE メールアラートを設定する必要があります。アラートを設定をクリックすると、新しいブラウザウィンドウで設定ページが開き、システム全体のアラートを設定することができます。詳しくは、アラートを参照してください。

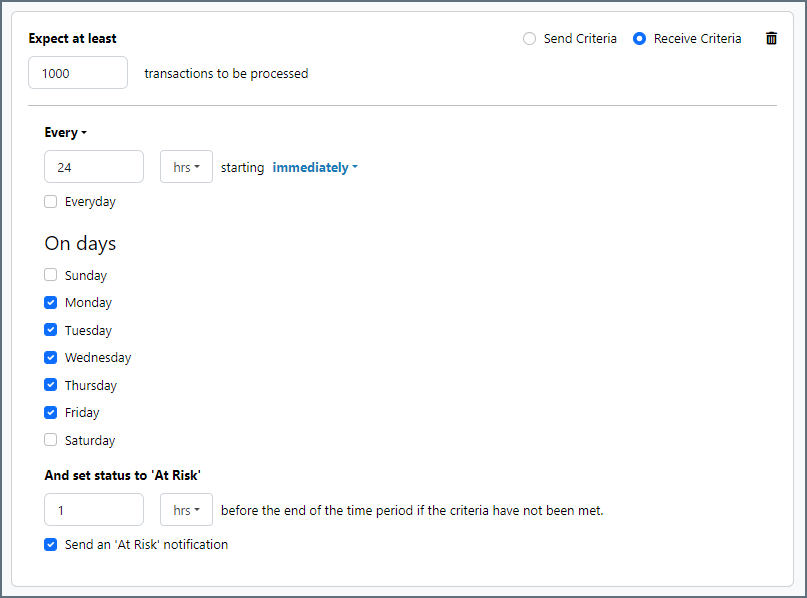

サービスレベル(SLA)の設定

サービスレベルでは、フロー内のコネクタが送受信すると予想される処理量を設定し、その量が満たされると予想される時間枠を設定できます。CData Arc は、サービスレベルが満たされていない場合にユーザーに警告するE メールを送信し、SLA を At Risk(危険) としてマークします。これは、サービスレベルがすぐに満たされない場合に Violated(違反) としてマークされることを意味します。これにより、ユーザーはサービスレベルが満たされていない理由を特定し、適切な措置を講じることができます。At Risk の期間内にサービスレベルが満たされなかった場合、SLA はViolated としてマークされ、ユーザーに再度通知されます。

サービスレベルを定義するには、予想処理量の条件を追加をクリックします。

- コネクタに個別の送信アクションと受信アクションがある場合は、ラジオボタンを使用してSLA に関連する方向を指定します。

- 検知基準(最小)を、処理が予想されるトランザクションの最小値(量)に設定し、毎フィールドを使用して期間を指定します。

- デフォルトでは、SLA は毎日有効です。これを変更するには、毎日のチェックをOFF にし、希望する曜日のチェックをON にします。

- 期間終了前にステータスを’At Risk’ に設定するタイミングを使用して、SLA がAt Risk としてマークされるようにします。

- デフォルトでは、通知はSLA が違反のステータスになるまで送信されません。これを変更するには、‘At Risk’ 通知を送信のチェックをON にします。

次の例は、月曜日から金曜日まで毎日1000ファイルを受信すると予想されるコネクタに対して構成されたSLA を示しています。1000ファイルが受信されていない場合、期間終了の1時間前にAt Risk 通知が送信されます。

Advanced Tab

Many of the settings on the Advanced tab are dynamically loaded from the data source you are working with, so they are not covered in this documentation. You can find detailed information for your data source on the Online Help Files page of the CData website.

The options described below are available for all data sources.

Message

- Save to Sent Folder Check this to copy files processed by the connector to the Sent folder for the connector.

- Sent Folder Scheme Instructs the connector to group messages in the Sent folder according to the selected interval. For example, the Weekly option instructs the connector to create a new subfolder each week and store all messages for the week in that folder. The blank setting tells the connector to save all messages directly in the Sent folder. For connectors that process many messages, using subfolders helps keep messsages organized and improves performance.

Advanced Settings

Advanced settings are common settings for connectors that rely on arbitrary database drivers (for example, Database, CData, and API connectors) in order to make connections to various data sources.

- Auto Truncate When enabled, the connector automatically truncates any string or binary column values that are longer than the allowed limit.

- Command Timeout The command execution timeout duration in seconds.

- Last Inserted Id Query Provide a query to execute to retrieve the auto-increased Id for the last inserted record.

- Log Level The verbosity of logs generated by the connector. When you request support, set this to Debug.

- Local File Scheme A scheme for assigning filenames to messages that are output by the connector. You can use macros in your filenames dynamically to include information such as identifiers and timestamps. For more information, see Macros.

- Log Messages When checked, the connector keeps a copy of the message next to the logs for the message in the Logs directory. If you disable this, you might not be able to download a copy of the file from the Input or Output tabs.

- Max Failed Records The maximum number of records that are allowed to fail during insertion to allow processing to continue. The default value of 0 means that any errors cause the input message to be marked as an Error, and any uncommitted transactions are rolled back. A value of -1 means that all errors are ignored and the connector continues attempting to insert subsequent records. A positive value means that the connector continues attempting to insert records until the threshold is reached.

- Output File Format The format in which output data is represented. The default value (XML) causes the connector to output an XML file for every record that is processed, while optionally combining multiple records into a single data structure (depending on the value of Max Records). The CSV and TSV options output data in their respective file formats. This option is not available for complex table structures that include child tables.

- Process Changes Interval Unit Applicable to Select action only. When Use column columnname for processing new or changed records is checked in the Advanced portion of the Select Configuration section of the Settings tab, this controls how to interpret the Process Changes Interval setting (for example, Hours, Days, or Weeks). See Only Process New or Changed Records for details on this and the following two settings.

- Process Changes Interval When Use column columnname for processing new or changed records is checked on the Select Configuration section of the Settings tab, this controls how much historical data Arc attempts to process on the first attempt. For example, keeping the default of 180 (Days) means Arc only attempts to process data that has been created or modified in the last 180 days.

- Reset History Resets the cache stored when Use column columnname for processing new or changed records is checked.

- Batch Input Size The maximum number of queries in a batch if batches are supported by the data source.

- Batch Size The maximum number of batch messages in a batch group.

- Max Records The maximum number of records to include in a single output message. Use -1 to indicate that all output records should be put in a single file, and use 0 to indicate that the connector can decide based on the configured Output File Format. By default, XML outputs one record per file, and flat file formats include all records in one file.

- Transaction Size The maximum number of queries in a transaction.

- Processing Delay The amount of time (in seconds) by which the processing of files placed in the Input folder is delayed. This is a legacy setting. Best practice is to use a File connector to manage local file systems instead of this setting.

- Log Subfolder Scheme Instructs the connector to group files in the

Logsfolder according to the selected interval. For example, the Weekly option instructs the connector to create a new subfolder each week and store all logs for the week in that folder. The blank setting tells the connector to save all logs directly in theLogsfolder. For connectors that process many transactions, using subfolders can help keep logs organized and improve performance.

Miscellaneous

Miscellaneous settings are for specific use cases.

- Other Settings Enables you to configure hidden connector settings in a semicolon-separated list (for example,

setting1=value1;setting2=value2). Normal connector use cases and functionality should not require the use of these settings.

Macros

Using macros in file naming strategies can enhance organizational efficiency and contextual understanding of data. By incorporating macros into filenames, you can dynamically include relevant information such as identifiers, timestamps, and header information, providing valuable context to each file. This helps ensure that filenames reflect details important to your organization.

CData Arc supports these macros, which all use the following syntax: %Macro%.

| Macro | Description |

|---|---|

| ConnectorID | Evaluates to the ConnectorID of the connector. |

| Ext | Evaluates to the file extension of the file currently being processed by the connector. |

| Filename | Evaluates to the filename (extension included) of the file currently being processed by the connector. |

| FilenameNoExt | Evaluates to the filename (without the extension) of the file currently being processed by the connector. |

| MessageId | Evaluates to the MessageId of the message being output by the connector. |

| RegexFilename:pattern | Applies a RegEx pattern to the filename of the file currently being processed by the connector. |

| Header:headername | Evaluates to the value of a targeted header (headername) on the current message being processed by the connector. |

| LongDate | Evaluates to the current datetime of the system in long-handed format (for example, Wednesday, January 24, 2024). |

| ShortDate | Evaluates to the current datetime of the system in a yyyy-MM-dd format (for example, 2024-01-24). |

| DateFormat:format | Evaluates to the current datetime of the system in the specified format (format). See サンプル日付フォーマット for the available datetime formats |

| Vault:vaultitem | Evaluates to the value of the specified vault item. |

Examples

Some macros, such as %Ext% and %ShortDate%, do not require an argument, but others do. All macros that take an argument use the following syntax: %Macro:argument%

Here are some examples of the macros that take an argument:

- %Header:headername%: Where

headernameis the name of a header on a message. - %Header:mycustomheader% resolves to the value of the

mycustomheaderheader set on the input message. - %Header:ponum% resolves to the value of the

ponumheader set on the input message. - %RegexFilename:pattern%: Where

patternis a regex pattern. For example,%RegexFilename:^([\w][A-Za-z]+)%matches and resolves to the first word in the filename and is case insensitive (test_file.xmlresolves totest). - %Vault:vaultitem%: Where

vaultitemis the name of an item in the vault. For example,%Vault:companyname%resolves to the value of thecompanynameitem stored in the vault. - %DateFormat:format%: Where

formatis an accepted date format (see サンプル日付フォーマット for details). For example,%DateFormat:yyyy-MM-dd-HH-mm-ss-fff%resolves to the date and timestamp on the file.

You can also create more sophisticated macros, as shown in the following examples:

- Combining multiple macros in one filename:

%DateFormat:yyyy-MM-dd-HH-mm-ss-fff%%EXT% - Including text outside of the macro:

MyFile_%DateFormat:yyyy-MM-dd-HH-mm-ss-fff% - Including text within the macro:

%DateFormat:'DateProcessed-'yyyy-MM-dd_'TimeProcessed-'HH-mm-ss%