Google BigQuery は、分析用のフルマネージドクラウドデータウェアハウスです。Google BigQuery は、Google Storage と連携して大規模なデータセットをインタラクティブに分析することができ、テラバイトを数秒、ペタバイトを数分でスキャンすることができます。

Google BigQuery as Analytical Storage

CALL SYSADMIN.createConnection('dwh','bigquery','projectId=XXXX,[email protected],password=$${jboss.server.config.dir}/../deployments/bigquery.p12') ;;CALL SYSADMIN.createDatasource('dwh','bigquery','importer.schemaPattern=dataset_name,importer.defaultSchema=dataset_name,importer.useFullSchemaName=false,importer.useCatalogName=false','supportsNativeQueries=true,uploadMode=CLOUDSTORAGE,bucketName=dv_upload_bucket,useDdl=false') ;;CALL SYSADMIN.setCurrentDWH('dwh', 'dataset_name') ;;Please note that Google BigQuery does not support recommended indexes.

Type Name

bigquery

Connection Properties

テンプレート名:bigquery

適切なトランスレータ名: bigquery

プロパティ:

projectId(必須)transformQuery(デフォルト:TRUE);(廃止)credentialFactory(デフォルト:com.datavirtuality.dv.core.oauth.credential.BigQueryOAuthCredentialFactory)allowLargeResults (obsolete)largeResultsDefaultDatasettableMaxResultsfetchSizerefreshTokenaccessTokenexpirationTimeMillisecondsregionauthCoderedirectUriuser-name(必須)password(デフォルト: 空)ClientIdClientSecretdriver(デフォルト:bigquery)driver-class(デフォルト:com.datavirtuality.jdbc.bq.BQDriver)storageProjectId(デフォルト:空)storageUser(デフォルト:空)storagePassword(デフォルト:空)new-connection-sqlcheck-valid-connection-sql(デフォルト:select 1)min-pool-size(デフォルト:2)max-pool-size(デフォルト:70)readTimeout(デフォルト:20000、0は無限大、負数はデフォルト)connectTimeout20000、0は無限大、負数はデフォルト)

以下はその例です:

CALL SYSADMIN.createConnection('bq2','bigquery','projectId=XXXX,[email protected],password=$${jboss.server.config.dir}/../deployments/bigquery.p12') ;;CALL SYSADMIN.createDatasource('bq2','bigquery','importer.useFullSchemaName=false,importer.useCatalogName=false','supportsNativeQueries=true,uploadMode=CLOUDSTORAGE,bucketName=dv_upload_bucket,useDdl=false') ;;以下の理由から、BigQuery データソースを作成する際にはbucketName を指定することを強くお勧めします:

- 複数のデータ挿入を実行している間に超過する可能性のあるバケット作成制限。詳しくはGoogle Cloudstorage documentation を参照してください;

- データの挿入時にエラーが発生する可能性があるBigQuery データセットと一時バケット間のリージョンの不一致。詳細はGoogle BigQuery documentation を参照してください。

Translator Properties

Translator Properties Shared by All JDBC Connectors

(アルファベット順)

To view the full table, click the expand button in its top right corner

Name | Description | Default value |

|---|---|---|

| Sets a template to convert Examples comparisonStringConversion=%s-- no conversion will be appliedcomparisonStringConversion=binary %s-- WHERE binary someStringExpression LIKE someOtherStringExpressioncomparisonStringConversion=(%s COLLATE Latin1_General_CS_AS)-- WHERE (someStringExpression COLLATE Latin1_General_CS_AS LIKE someOtherStringExpression) |

|

| Database time zone, used when fetching date, time, or timestamp values | System default time zone |

| Specific database version, used to fine-tune pushdown support | Automatically detected by the server through the data source JDBC driver, if possible |

| Only considered when |

|

| If |

|

| Maximum size of prepared insert batch |

|

| Sets a template to convert Examples OrderByStringConversion=%s-- no conversion will be appliedOrderByStringConversion=(binary %s)-- ORDER BY (binary someStringExpression)OrderByStringConversion=cast((%s) as varchar(2000) ccsid 1208)-- ORDER BY cast((someStringExpression) as varchar(2000) ccsid 1208) |

|

| If |

|

| Forces a translator to issue a Example SELECT x.* FROM table ( CALL "dwh.native"("request" => 'SELECT query, pid, elapsed, substring FROM svl_qlog ORDER BY STARTTIME DESC LIMIT 200') ) w , ARRAYTABLE( w.tuple COLUMNS query string, pid integer , elapsed string, "substring" string ) x; |

|

| If If |

|

| If If |

|

| If |

|

| if |

|

| Embeds a / comment / leading comment with session/request id in the source SQL query for informational purposes |

|

The names of the translator properties are case-sensitive.

Translator Properties Specific for Google BigQuery

To view the full table, click the expand button in its top right corner

Name | Description | Default value |

|---|---|---|

| String property. If set, the translator replaces all null characters in strings before executing | Single space |

| Values:

|

|

| Value: bucket name Only for the default ( | |

| Value: bucket prefix Only for the default ( | |

| Value: name of a folder in a bucket Only for the default ( | |

| Value: boolean If |

|

| If set to If set to |

|

Data Source Properties

Data Source Properties Shared by All JDBC Connectors

(アルファベット順)

To view the full table, click the expand button in its top right corner

Name | Description | Default |

|---|---|---|

| Replaces |

|

| Database catalogs to use. Can be used if the Only for Microsoft SQL Server and Snowflake:

| Exasol: EXA_DBAll others: empty |

importer.defaultCatalog |

| |

|

Please note that writing into a data source is only possible if this parameter is set. | Empty |

| Turns on metadata cache for a single data source even when the global option is turned off. Together with |

|

| Case-insensitive regular expression that will exclude a matching fully qualified procedure name from import | Empty |

| Comma-separated list of schemas (no | Oracle: APEX_PUBLIC_USER,DIP,FLOWS_040100,FLOWS_020100,FLOWS_FILES,MDDATA,ORACLE_OCM,SPATIAL_CSW_ADMIN_USR,SPATIAL_WFS_ADMIN_USR,XS$NULL,BI,HR,OE,PM,IX,SH,SYS,SYSTEM,MDSYS,CTXSYSAll others: empty |

| Case-insensitive regular expression that will exclude a matching fully qualified table name from import. Does not speed up metadata loading. Here are some examples: 1. Excluding all tables in the (source) schemas importer.excludeTables=(.*[.]sys[.].*|.*[.]INFORMATION_SCHEMA[.].*)2. Excluding all tables except the ones starting with "public.br" and "public.mk" using a negative lookahead: importer.excludeTables=(?!public\.(br|mk)).*3. Excluding "tablename11" from the list ["tablename1", "tablename11", "company", "companies"]: importer.excludeTables=.*\.(?!\btablename1\b|\bcompan).*

| Empty |

| Fetch size assigned to a resultset on loading metadata | No default value |

| If set to |

|

| If set to |

|

| If set to |

|

| If set to Please note that it is currently not possible to import procedures which use the same name for more than one parameter (e.g. same name for |

|

| Set to |

|

| If set to |

|

| If set to |

|

| Procedure(s) to import. If omitted, all procedures will be imported. | Empty |

| If set to |

|

| If set to |

|

| If set to |

|

| If set to |

|

| Schema(s) to import. If omitted or has "" value, all schemas will be imported. | Empty |

| If set to |

|

| Table(s) to import. If omitted, all tables will be imported. | Empty |

| Comma-separated list (without spaces) of table types to import. Available types depend on the DBMS. Usual format: Other typical types are | Empty |

| If set to | |

| If set to Please note that this may lead to objects with duplicate names when importing from multiple schemas, which results in an exception |

|

| If set to |

|

| If set to |

|

Creating/Dropping Tables and Inserting Data

BigQeury データソースにテーブルを作成 / 削除してデータを挿入するには、importer.defaultSchema データソースプロパティをターゲットデータセットの名前に設定する必要があります。以下はその例です:

CALL SYSADMIN.createConnection(name => 'bq', jbossCliTemplateName => 'bigquery', connectionOrResourceAdapterProperties => 'projectId=XXXX,[email protected],password=PATH_TO_KEY_FILE') ;;CALL SYSADMIN.createDatasource(name => 'bq', translator => 'bigquery', modelProperties => 'importer.useCatalogName=false,importer.defaultSchema=TARGET_DATA_SET,importer.schemaPattern=SCHEMA_PATTERN_INCLUDING_TARGET_DATASET,importer.useFullSchemaName=false') ;;Segmenting with Partitioned Tables

BigQuery のパーティショニング機能は、API モードとDDL(useDdl=TRUE)モードの両方でサポートされています。

パーティショニングはOPTIONS 句でサポートされます。

Types of Partitioning

Integer range partitioning

特定の INTEGER カラムの値の範囲に基づいてテーブルをパーティショニングできます。

Time-unit column partitioning

テーブル内の DATE またはTIMESTAMP カラムでテーブルをパーティショニングできます。

TIMESTAMP カラムの場合、パーティションは時間単位、日単位、月単位、年単位のいずれかになります。 DATE カラムの場合、パーティションは日、月、年の粒度を持つことができます。パーティションの境界はUTC 時間に基づいています。

Ingestion time partitioning

DDL モードでのみ有効です。

インジェスチョンタイムパーティションテーブルには、 _PARTITIONTIME という名前の疑似カラムがあります。このカラムの値は、パーティション境界(1時間ごとや1日ごとなど)で切り捨てられた、各行の取り込み時間です。 _PARTITIONTIME を使う代わりに、 _PARTITIONDATE を使うこともできます。 _PARTITIONDATE 擬似カラムには、 _PARTITIONTIME 擬似カラムの値に対応するUTC 日付が含まれます。

パーティションの粒度は、時間単位、日単位、月単位、年単位から選択できます。

Partitioning Options

partition_expiration_days

取り込み時間または時間単位カラムでパーティショニングされたテーブルを作成する場合、パーティションの有効期限を指定できます。この設定は、BigQuery が各パーティションのデータを保持する期間を指定します。この設定はテーブル内のすべてのパーティションに適用されますが、パーティション時間に基づいてパーティションごとに独立して計算されます。

FLOAT の値を受け付けます。

require_partition_filter

このテーブルに対するクエリに、パーティショニングカラムでフィルタリングする述語フィルタを含める必要があるかどうかを指定します。 デフォルト値は FALSE です。

partition_expression

Partition_expression

| description | partitioning type | valid for mode |

|---|---|---|---|

| Partition by ingestion time with daily partitions | Ingestion time | DDL |

| Equivalent to | Ingestion time | DDL |

| Partition by the | Time-unit column | API/DDL |

| Partition by the | Time-unit column | API/DDL |

| Partition by the | Time-unit column | API/DDL |

| Partition by the | Time-unit column | DDL |

| Partition by ingestion time with the specified partitioning type | Ingestion time | DDL |

| Partition by the | Time-unit column | API/DDL |

| Partition by an integer column with the specified range, where:

| Integer range | API/DDL |

Examples

1. 日単位の粒度でDATE カラムでパーティショニングし、パーティションに3日間のデータを保持し、テーブルからクエリを実行するためにパーティショニングカラムでフィルタ処理するフィルタが必要です。つまり、SELECT クエリにはDATE カラムを含むWhere 句が含まれている必要があります:

CREATE TABLE bigquery.partition1 (i INTEGER, d DATE, s STRING) OPTIONS (partition_by 'd',partition_expiration_days '3', require_partition_filter 'true') ;;2. 月単位の粒度でタイムスタンプ範囲にパーティショニングされたテーブルを作成する:

CREATE TABLE bigquery_ddl.partition_ddl_date (i integer, d timestamp, s string) OPTIONS (partition_by 'DATE_TRUNC(d, MONTH)') ;;3. 整数範囲でパーティショニングされたテーブルを作成するには、テーブルからのクエリに対してパーティションカラムフィルタ処理するフィルタが必要です。つまり、SELECT クエリには、INTEGER カラムを含むWhere 句が必要です:

CREATE TABLE bigquery_ddl.partition_ddl_integer (i integer, d timestamp, s string) OPTIONS (partition_by 'RANGE_BUCKET(i, GENERATE_ARRAY(0, 100, 10))', require_partition_filter 'true') ;;Partitioning options and integer-range, date-unit partitioning for API mode are available since v4.10

Clustering

クラスタリングはOPTIONS 句でサポートされます:

CREATE TABLE bigquery.cluster (i integer, d date, ts timestamp, s string, b boolean) OPTIONS (cluster_by 'i,d,ts,s') ;;Partitioning and Clustering for Materialization of Recommended Optimizations

BigQuery のマテリアライズドテーブルは、SYSADMIN.createCreationParam プロシージャを使用してパーティショニングおよびクラスタリングできます。

パラメータキー:

partition_bycluster_bypartition_expiration_daysrequire_partition_filter

Example

-- Add "partition_by" creation parameterCALL "SYSADMIN.createCreationParam"("recOptId" => 1,"paramKey" => 'partition_by',"paramValue" => 'RANGE_BUCKET(i, GENERATE_ARRAY(0, 100, 10))');;-- Add "require_partition_filter" creation parameterCALL "SYSADMIN.createCreationParam"("recOptId" => 1,"paramKey" => 'require_partition_filter',"paramValue" => true);;ID が1に等しい推奨される最適化では、次の例のようにパーティショニングされたマテリアライズドテーブルが作成されます:

CREATE TABLE bigquery_ddl.partition_ddl_integer (i integer, d timestamp, s string) OPTIONS (partition_by 'RANGE_BUCKET(i, GENERATE_ARRAY(0, 100, 10))', require_partition_filter 'true') ;;Configuring 3-legged OAuth for BigQuery

BigQuery で3-legged OAuth を設定するには、以下の手順に従います:

1. BigQuery プロジェクトのDeveloper Console にログインします。

2. 新しいOAuth2 認証情報(CLIENT_ID とCLIENT_SECRET キーのペア)を生成します。

3. 以下のリンクの CLIENT_ID を自分の CLIENT_ID に置き換え、ブラウザでこのリンクにアクセスします:

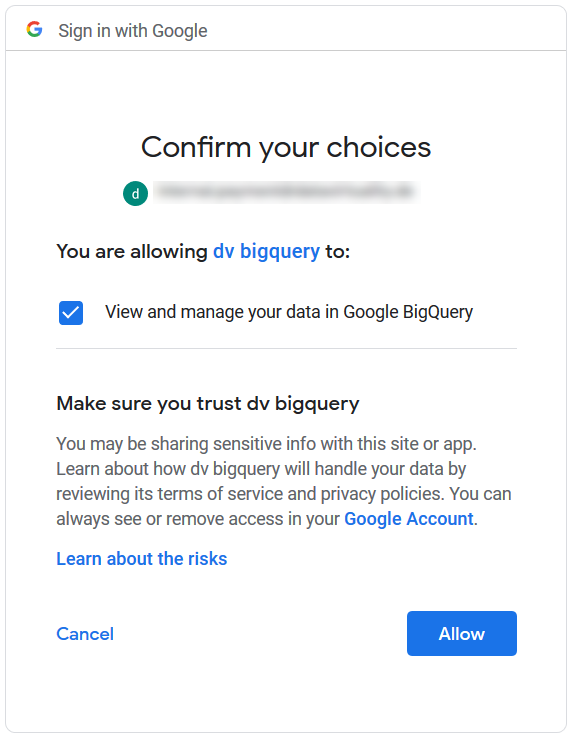

4. 'Allow' を選択してアクセスを許可します:

5. 存在しないページにリダイレクトされますが、これは正しいです。ページのURL をコピーしてコードを取得します:

![]() OAuth コードは10分間のみ有効です。

OAuth コードは10分間のみ有効です。

|

6. ステップ3 で取得したCLIENT_ID とCLIENT_SECRET、ステップ5 で取得したAUTH_CODE、BigQuery プロジェクトのPROJECT_ID を使用してデータソースを作成します。redirectUrl は、ステップ3 と5 と同じである必要があります:

CALL SYSADMIN.createConnection('bq3legged','bigquery','authType=OAUTH,projectId=PROJECT_ID ,authCode=AUTH_CODE ,redirectUri=http%3A%2F%2Flocalhost%3A9000%2Fredirect.html%3Fto%3Dconnect/bigquery/oauth,user-name=CLIENT_ID,password=CLIENT_SECRET') ;;CALL SYSADMIN.createDatasource('bq3legged','bigquery','importer.schemaPattern=bq_schema_pattern,importer.defaultSchema=bq_schema, importer.useFullSchemaName=false,importer.useCatalogName=false','supportsNativeQueries=true') ;;See Also

Partitioning Tables in BigQuery パーティショニングされていないテーブルをパーティショニングされたテーブルに変換してクエリのパフォーマンスを向上させる方法を学ぶには、こちらをご覧ください。